Error function

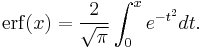

In mathematics, the error function (also called the Gauss error function) is a special function (non-elementary) of sigmoid shape that occurs in probability, statistics and partial differential equations. It is defined as:

$$\operatorname{erf}(x) = \frac{2}{\sqrt{\pi}} \int_0^x e^{-t^2}\,dt.$$

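The complementary error function, denoted erfc, is defined as

$$\operatorname{erfc}(x) = 1 - \operatorname{erf}(x) = \frac{2}{\sqrt{\pi}} \int_x^\infty e^{-t^2}\,dt.$$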

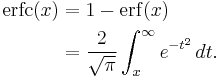

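The imaginary error function, denoted erfi, is defined as

$$\operatorname{erfi}(x) = -i\,\operatorname{erf}(ix) = \frac{2}{\sqrt{\pi}} \int_0^x e^{t^2}\,dt.$$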

The complex error function, denoted w(x) and also known as the Faddeeva function, is defined as

$$w(x) = e^{-x^2}\operatorname{erfc}(-ix) = e^{-x^2}\left[1 + i\,\operatorname{erfi}(x)\right].$$


The name "error function"

The error function is used in measurement theory (using probability and statistics), and although its use in other branches of mathematics has nothing to do with the characterization of measurement errors, the name has stuck.

The error function is, up to a constant factor, the integral of the Gaussian function (the "bell curve" of the normal distribution). As a result, erf(x) gives the probability that a measurement subject to normally distributed random errors with standard deviation 1/√2 lies within a distance x of the mean. This function is used in statistics to predict the behavior of a sample with respect to the population mean. This usage is similar to the Q-function, which can in fact be written in terms of the error function.

Properties

Plots in the complex plane

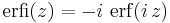

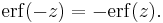

The error function is odd:

$$\operatorname{erf}(-z) = -\operatorname{erf}(z).$$

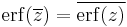

Also, for any complex number z:

$$\operatorname{erf}(\bar{z}) = \overline{\operatorname{erf}(z)},$$

where $\bar{z}$ is the complex conjugate of z.

The integrand ƒ = exp(−z²) and ƒ = erf(z) are shown in the complex z-plane in figures 2 and 3. The level Im(ƒ) = 0 is shown with a thick green line. Negative integer values of Im(ƒ) are shown with thick red lines, and positive integer values of Im(ƒ) with thick blue lines. Intermediate levels of Im(ƒ) = constant are shown with thin green lines. Intermediate levels of Re(ƒ) = constant are shown with thin red lines for negative values and with thin blue lines for positive values.

On the real axis, erf(z) approaches unity as z → +∞ and −1 as z → −∞. On the imaginary axis, it tends to ±i∞.

Taylor series

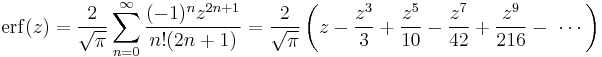

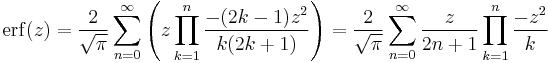

The error function is an entire function; it has no singularities (except that at infinity) and its Taylor expansion always converges.

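The defining integral cannot be evaluated in closed form in terms of elementary functions. However, expanding the integrand $e^{-z^2}$ into its Taylor series and integrating term by term gives the error function's Taylor series:

$$\operatorname{erf}(z) = \frac{2}{\sqrt{\pi}} \sum_{n=0}^\infty \frac{(-1)^n z^{2n+1}}{n!\,(2n+1)} = \frac{2}{\sqrt{\pi}} \left(z - \frac{z^3}{3} + \frac{z^5}{10} - \frac{z^7}{42} + \frac{z^9}{216} - \cdots\right),$$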

which holds for every complex number z. The denominator terms are sequence A007680 in the OEIS.

For iterative calculation of the above series, the following alternative formulation may be useful:

$$\operatorname{erf}(z) = \frac{2}{\sqrt{\pi}} \sum_{n=0}^\infty \left(z \prod_{k=1}^n \frac{-(2k-1)\,z^2}{k\,(2k+1)}\right) = \frac{2}{\sqrt{\pi}} \sum_{n=0}^\infty \frac{z}{2n+1} \prod_{k=1}^n \frac{-z^2}{k},$$

because $\frac{-(2k-1)z^2}{k(2k+1)}$ is the multiplier that turns the kth term into the (k + 1)th term (considering z as the first term).
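The iterative formulation maps directly onto a loop that updates each term from the previous one. Below is a minimal Python sketch, not a production implementation. The function name `erf_taylor`, the relative stopping tolerance and the term cap are our own choices. It accepts real or complex z but is only accurate for moderate |z|: for large |z| the alternating terms grow large before they decay, and cancellation destroys precision.

```python
import math


def erf_taylor(z, rel_tol=1e-17, max_terms=500):
    """Sum the Maclaurin series of erf(z) for real or complex z.

    Each new term is the previous one times -(2k-1) z^2 / (k (2k+1)),
    starting from the first term z.
    """
    z2 = z * z
    term = z            # first term of the series
    total = z
    for k in range(1, max_terms):
        term *= -(2 * k - 1) * z2 / (k * (2 * k + 1))
        total += term
        if abs(term) <= rel_tol * abs(total):
            break        # further terms no longer change the sum
    return 2 / math.sqrt(math.pi) * total


print(erf_taylor(0.5), math.erf(0.5))
print(erf_taylor(1 + 1j))    # complex arguments work too
```

For real arguments the result can be compared with `math.erf`. The oddness and conjugate-symmetry properties can be checked by evaluating at −z and at the conjugate of z.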

The error function at +∞ is exactly 1 (see Gaussian integral).

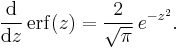

The derivative of the error function follows immediately from its definition:

$$\frac{d}{dz}\operatorname{erf}(z) = \frac{2}{\sqrt{\pi}}\, e^{-z^2}.$$

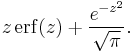

An antiderivative of the error function is

$$\int \operatorname{erf}(z)\,dz = z\,\operatorname{erf}(z) + \frac{e^{-z^2}}{\sqrt{\pi}}.$$
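Both formulas are easy to check numerically. The following Python sketch (helper names are illustrative) does two things:

- It compares a central finite difference of `math.erf` with 2/√π · e^{−x²}.
- It compares the definite integral of erf over [−1, 2], computed with composite Simpson's rule, against the difference of the stated antiderivative at the endpoints.

```python
import math


def erf_derivative(x):
    # d/dx erf(x) = 2/sqrt(pi) * exp(-x^2)
    return 2 / math.sqrt(math.pi) * math.exp(-x * x)


def erf_antiderivative(x):
    # F(x) = x erf(x) + exp(-x^2)/sqrt(pi); F'(x) = erf(x)
    return x * math.erf(x) + math.exp(-x * x) / math.sqrt(math.pi)


def simpson(f, a, b, n=1000):
    """Composite Simpson's rule on [a, b] with an even number n of subintervals."""
    h = (b - a) / n
    s = f(a) + f(b)
    for i in range(1, n):
        s += (4 if i % 2 else 2) * f(a + i * h)
    return s * h / 3


x0, step = 0.8, 1e-5
finite_diff = (math.erf(x0 + step) - math.erf(x0 - step)) / (2 * step)
quad = simpson(math.erf, -1.0, 2.0)
exact = erf_antiderivative(2.0) - erf_antiderivative(-1.0)
print(finite_diff, erf_derivative(x0))
print(quad, exact)
```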

Inverse functions

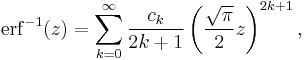

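The inverse error function can be defined in terms of the Maclaurin series

$$\operatorname{erf}^{-1}(z) = \sum_{k=0}^\infty \frac{c_k}{2k+1} \left(\frac{\sqrt{\pi}}{2}\, z\right)^{2k+1},$$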

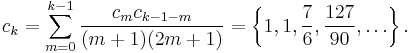

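where $c_0 = 1$ and

$$c_k = \sum_{m=0}^{k-1} \frac{c_m\, c_{k-1-m}}{(m+1)(2m+1)} = \left\{1, 1, \frac{7}{6}, \frac{127}{90}, \frac{4369}{2520}, \ldots\right\}.$$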

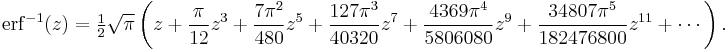

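So we have the series expansion (note that common factors have been canceled from numerators and denominators):

$$\operatorname{erf}^{-1}(z) = \frac{\sqrt{\pi}}{2}\left(z + \frac{\pi}{12} z^3 + \frac{7\pi^2}{480} z^5 + \frac{127\pi^3}{40320} z^7 + \frac{4369\pi^4}{5806080} z^9 + \frac{34807\pi^5}{182476800} z^{11} + \cdots\right).$$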

(After cancellation the numerator/denominator fractions are entries A092676/A132467 in the OEIS; without cancellation the numerator terms are given in entry A002067.) Note that the error function's value at ±∞ is equal to ±1.

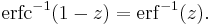

The inverse complementary error function is defined as

$$\operatorname{erfc}^{-1}(1 - z) = \operatorname{erf}^{-1}(z).$$
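The coefficient recurrence can be run directly with exact rational arithmetic. This Python sketch (all names are our own) proceeds in three steps:

- It builds c_k with `fractions.Fraction`.
- It sums a truncated Maclaurin series for erf⁻¹.
- It derives erfc⁻¹ from erfc⁻¹(y) = erf⁻¹(1 − y).

Truncating at 40 terms is an assumption that works well for |z| ≤ 0.5. The series converges slowly as |z| approaches 1, so it is not a general-purpose evaluator there.

```python
import math
from fractions import Fraction
from functools import lru_cache


@lru_cache(maxsize=None)
def inverse_erf_coefficients(n):
    """Exact c_0, ..., c_{n-1} from c_k = sum_m c_m c_{k-1-m} / ((m+1)(2m+1))."""
    c = [Fraction(1)]
    for k in range(1, n):
        c.append(sum(c[m] * c[k - 1 - m] / ((m + 1) * (2 * m + 1)) for m in range(k)))
    return tuple(c)


def erfinv_series(z, n_terms=40):
    """Truncated Maclaurin series of erf^{-1}(z); only practical for moderate |z|."""
    w = math.sqrt(math.pi) / 2 * z
    return sum(float(ck) / (2 * k + 1) * w ** (2 * k + 1)
               for k, ck in enumerate(inverse_erf_coefficients(n_terms)))


def erfcinv_series(y, n_terms=40):
    # erfc^{-1}(y) = erf^{-1}(1 - y)
    return erfinv_series(1 - y, n_terms)


print(inverse_erf_coefficients(5))
# (Fraction(1, 1), Fraction(1, 1), Fraction(7, 6), Fraction(127, 90), Fraction(4369, 2520))
print(erfinv_series(0.5), math.erf(erfinv_series(0.5)))
```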

Asymptotic expansion

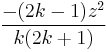

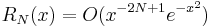

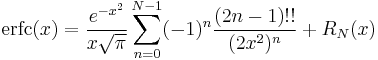

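A useful asymptotic expansion of the complementary error function (and therefore also of the error function) for large x is

$$\operatorname{erfc}(x) = \frac{e^{-x^2}}{x\sqrt{\pi}}\left[1 + \sum_{n=1}^\infty (-1)^n \frac{1\cdot 3\cdot 5\cdots(2n-1)}{(2x^2)^n}\right] = \frac{e^{-x^2}}{x\sqrt{\pi}} \sum_{n=0}^\infty (-1)^n \frac{(2n-1)!!}{(2x^2)^n}.$$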

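This series diverges for every finite x. Its meaning as an asymptotic expansion is that, for any $N \in \mathbb{N}$, one has

$$\operatorname{erfc}(x) = \frac{e^{-x^2}}{x\sqrt{\pi}} \sum_{n=0}^{N-1} (-1)^n \frac{(2n-1)!!}{(2x^2)^n} + R_N(x),$$

where the remainder, in Landau notation, is

$$R_N(x) = O\left(x^{-(2N+1)}\, e^{-x^2}\right)$$

as $x \to \infty$.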

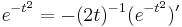

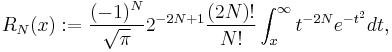

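Indeed, the exact value of the remainder is

$$R_N(x) := \frac{(-1)^N}{\sqrt{\pi}}\, 2^{1-2N}\, \frac{(2N)!}{N!} \int_x^\infty t^{-2N} e^{-t^2}\,dt,$$

which follows easily by induction, writing $e^{-t^2} = -(2t)^{-1}\left(e^{-t^2}\right)'$ and integrating by parts.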

For large enough values of x, only the first few terms of this asymptotic expansion are needed to obtain a good approximation of erfc(x). For moderate values of x, by contrast, the Taylor expansion at 0 converges very quickly.
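The truncated expansion can be written as a short loop using the term ratio −(2n + 1)/(2x²). In this Python sketch (the name `erfc_asymptotic` is hypothetical), the caller chooses the number of terms N. For real x > 0, the exact remainder formula implies that the error is at most the magnitude of the first omitted term. The bound therefore improves only while the terms are still decreasing in magnitude.

```python
import math


def erfc_asymptotic(x, n_terms):
    """Truncated asymptotic series for erfc(x), x > 0.

    erfc(x) ~ exp(-x^2)/(x sqrt(pi)) * sum_{n=0}^{N-1} (-1)^n (2n-1)!! / (2x^2)^n
    """
    term = 1.0          # n = 0 term, using the convention (-1)!! = 1
    total = 0.0
    for n in range(n_terms):
        total += term
        # ratio of consecutive terms: -(2n+1) / (2 x^2)
        term *= -(2 * n + 1) / (2 * x * x)
    return math.exp(-x * x) / (x * math.sqrt(math.pi)) * total


for N in (1, 2, 4, 8):
    approx = erfc_asymptotic(3.0, N)
    print(N, approx, abs(approx - math.erfc(3.0)) / math.erfc(3.0))
```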

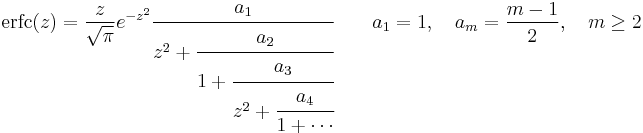

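Continued fraction expansion

A continued fraction expansion of the complementary error function is [1]:

$$\operatorname{erfc}(z) = \frac{z}{\sqrt{\pi}}\, e^{-z^2} \cfrac{1}{z^2 + \cfrac{a_1}{1 + \cfrac{a_2}{z^2 + \cfrac{a_3}{1 + \cdots}}}}, \qquad a_m = \frac{m}{2}.$$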
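One simple, if not the most efficient, way to evaluate the continued fraction numerically is to truncate it at a fixed depth and evaluate it from the bottom up. The Python sketch below does this for real x > 0. The depth of 1000 is an assumption chosen generously, and convergence is slow for small x. Production code would more likely use an adaptive scheme such as the modified Lentz algorithm.

```python
import math


def erfc_continued_fraction(x, depth=1000):
    """Evaluate the continued fraction for erfc(x), x > 0, bottom-up (sketch).

    erfc(x) = x/sqrt(pi) * exp(-x^2) / (x^2 + a_1/(1 + a_2/(x^2 + a_3/(1 + ...))))
    with a_m = m/2; the partial denominators alternate x^2, 1, x^2, 1, ...
    """
    x2 = x * x

    def b(m):
        # partial denominator at level m of the fraction
        return x2 if m % 2 == 0 else 1.0

    t = b(depth)                      # the tail below this level is dropped
    for m in range(depth, 0, -1):
        t = b(m - 1) + (m / 2) / t
    return x / math.sqrt(math.pi) * math.exp(-x2) / t


print(erfc_continued_fraction(2.0), math.erfc(2.0))
```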

Approximation with elementary functions

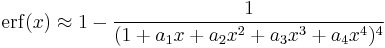

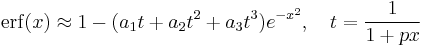

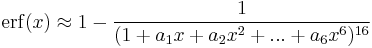

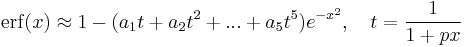

Abramowitz and Stegun give several approximations of varying accuracy (equations 7.1.25-28). This allows one to choose the fastest approximation suitable for a given application. In order of increasing accuracy, they are:

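$$\operatorname{erf}(x) \approx 1 - \frac{1}{(1 + a_1 x + a_2 x^2 + a_3 x^3 + a_4 x^4)^4}$$

(maximum error: 5·10⁻⁴)

where a1 = 0.278393, a2 = 0.230389, a3 = 0.000972, a4 = 0.078108

$$\operatorname{erf}(x) \approx 1 - (a_1 t + a_2 t^2 + a_3 t^3)\,e^{-x^2}, \qquad t = \frac{1}{1 + p x}$$

(maximum error: 2.5·10⁻⁵)

where p = 0.47047, a1 = 0.3480242, a2 = -0.0958798, a3 = 0.7478556

$$\operatorname{erf}(x) \approx 1 - \frac{1}{(1 + a_1 x + a_2 x^2 + a_3 x^3 + a_4 x^4 + a_5 x^5 + a_6 x^6)^{16}}$$

(maximum error: 3·10⁻⁷)

where a1 = 0.0705230784, a2 = 0.0422820123, a3 = 0.0092705272, a4 = 0.0001520143, a5 = 0.0002765672, a6 = 0.0000430638

$$\operatorname{erf}(x) \approx 1 - (a_1 t + a_2 t^2 + a_3 t^3 + a_4 t^4 + a_5 t^5)\,e^{-x^2}, \qquad t = \frac{1}{1 + p x}$$

(maximum error: 1.5·10⁻⁷)

where p = 0.3275911, a1 = 0.254829592, a2 = -0.284496736, a3 = 1.421413741, a4 = -1.453152027, a5 = 1.061405429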

All of these approximations are valid for x ≥ 0. To use them for negative x, use the fact that erf is an odd function, so erf(x) = −erf(−x).
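Two of these formulas, the quartic one and the five-term one in t, can be coded directly and checked against the library error function on a grid. This Python sketch uses the coefficients listed above. The function names are our own, and negative arguments are handled through erf(x) = −erf(−x) as described.

```python
import math

# Coefficients as tabulated above (Abramowitz and Stegun).
QUARTIC_A = (0.278393, 0.230389, 0.000972, 0.078108)        # max error 5e-4
QUINTIC_P = 0.3275911
QUINTIC_A = (0.254829592, -0.284496736, 1.421413741,
             -1.453152027, 1.061405429)                       # max error 1.5e-7


def erf_quartic(x):
    """1 - 1/(1 + a1 x + a2 x^2 + a3 x^3 + a4 x^4)^4, made odd for x < 0."""
    sign, x = math.copysign(1.0, x), abs(x)
    poly = 1.0 + sum(a * x ** (k + 1) for k, a in enumerate(QUARTIC_A))
    return sign * (1.0 - poly ** -4)


def erf_quintic_t(x):
    """1 - (a1 t + ... + a5 t^5) exp(-x^2) with t = 1/(1 + p x), made odd for x < 0."""
    sign, x = math.copysign(1.0, x), abs(x)
    t = 1.0 / (1.0 + QUINTIC_P * x)
    poly = 0.0
    for a in reversed(QUINTIC_A):    # Horner form: ((a5 t + a4) t + ...) t
        poly = (poly + a) * t
    return sign * (1.0 - poly * math.exp(-x * x))


grid = [i / 100 for i in range(-500, 501)]
print(max(abs(erf_quartic(x) - math.erf(x)) for x in grid))
print(max(abs(erf_quintic_t(x) - math.erf(x)) for x in grid))
```

The five-term form is the usual choice when a cheap, branch-free erf with about seven correct digits is enough.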

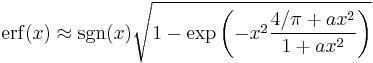

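Another approximation is given by

$$\operatorname{erf}(x) \approx \operatorname{sgn}(x)\sqrt{1 - \exp\left(-x^2\,\frac{4/\pi + a x^2}{1 + a x^2}\right)}$$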

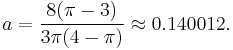

where

$$a = \frac{8(\pi - 3)}{3\pi(4 - \pi)} \approx 0.140012.$$

This is designed to be very accurate in a neighborhood of 0 and a neighborhood of infinity, and the error is less than 0.00035 for all x. Using the alternate value a ≈ 0.147 reduces the maximum error to about 0.00012.[2]

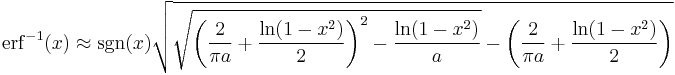

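This approximation can also be inverted to calculate the inverse error function:

$$\operatorname{erf}^{-1}(x) \approx \operatorname{sgn}(x)\sqrt{\sqrt{\left(\frac{2}{\pi a} + \frac{\ln(1 - x^2)}{2}\right)^2 - \frac{\ln(1 - x^2)}{a}} - \left(\frac{2}{\pi a} + \frac{\ln(1 - x^2)}{2}\right)}.$$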
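The forward formula and its closed-form inverse fit in a few lines. In this Python sketch (function names are illustrative), a defaults to the alternate value 0.147. Two precautions avoid cancellation:

- `math.expm1` and `math.log1p` are used near 0.
- The square-root difference is rewritten in an algebraically equivalent, cancellation-free form when its second term is positive.

The inverse requires −1 < y < 1.

```python
import math

A_ALT = 0.147   # alternate constant quoted above (smaller maximum error)


def erf_winitzki(x, a=A_ALT):
    """sgn(x) sqrt(1 - exp(-x^2 (4/pi + a x^2) / (1 + a x^2)))."""
    x2 = x * x
    inner = -x2 * (4 / math.pi + a * x2) / (1 + a * x2)
    # -expm1(inner) equals 1 - exp(inner) without cancellation near x = 0
    return math.copysign(math.sqrt(-math.expm1(inner)), x)


def erfinv_winitzki(y, a=A_ALT):
    """Closed-form inverse of erf_winitzki; requires -1 < y < 1."""
    ln = math.log1p(-y * y)          # ln(1 - y^2) <= 0
    b = 2 / (math.pi * a) + ln / 2
    c = -ln / a                       # >= 0
    root = math.sqrt(b * b + c)
    # root - b, rewritten as c / (root + b) when b > 0 to avoid cancellation
    u = c / (root + b) if b > 0 else root - b
    return math.copysign(math.sqrt(u), y)


print(erf_winitzki(0.5), math.erf(0.5))
print(erfinv_winitzki(erf_winitzki(0.5)))   # recovers 0.5 up to rounding
```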

Applications

When the results of a series of measurements are described by a normal distribution with standard deviation σ and expected value 0, then $\operatorname{erf}\left(\frac{a}{\sigma\sqrt{2}}\right)$ is the probability that the error of a single measurement lies between −a and +a, for positive a. This is useful, for example, in determining the bit error rate of a digital communication system.
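The following Python sketch checks this relation numerically, assuming Python 3.8 or later for `statistics.NormalDist`. It compares the probability computed from erf with the difference of two normal CDF values, and it reproduces the familiar one-, two- and three-sigma probabilities. The helper name `prob_within` is hypothetical.

```python
import math
from statistics import NormalDist


def prob_within(a, sigma):
    """P(-a < X < a) for X ~ N(0, sigma^2), computed as erf(a / (sigma sqrt(2)))."""
    return math.erf(a / (sigma * math.sqrt(2.0)))


sigma, a = 2.0, 3.0
dist = NormalDist(mu=0.0, sigma=sigma)
print(prob_within(a, sigma), dist.cdf(a) - dist.cdf(-a))

# Probability of landing within 1, 2 and 3 standard deviations of the mean
print([round(prob_within(k * sigma, sigma), 4) for k in (1, 2, 3)])  # [0.6827, 0.9545, 0.9973]
```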

The error and complementary error functions occur, for example, in solutions of the heat equation when boundary conditions are given by the Heaviside step function.
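As an illustration, consider a standard textbook case (assumed here rather than taken from the text above). On the whole real line, the heat equation u_t = D u_xx with step initial data u(x, 0) = 1 for x < 0 and 0 for x > 0 has the solution u(x, t) = ½ erfc(x / (2√(Dt))). The Python sketch below uses finite differences to check that this function satisfies the equation at one sample point. D, x, t and the step sizes are arbitrary choices.

```python
import math


def heat_step_solution(x, t, D):
    """u(x, t) = erfc(x / (2 sqrt(D t))) / 2 for t > 0 (step initial data, diffusivity D)."""
    return 0.5 * math.erfc(x / (2.0 * math.sqrt(D * t)))


D, x, t = 0.5, 0.5, 1.0
ht, hx = 1e-5, 1e-3
# central differences for u_t and u_xx at the sample point
u_t = (heat_step_solution(x, t + ht, D) - heat_step_solution(x, t - ht, D)) / (2 * ht)
u_xx = (heat_step_solution(x + hx, t, D) - 2 * heat_step_solution(x, t, D)
        + heat_step_solution(x - hx, t, D)) / hx ** 2
print(u_t, D * u_xx)   # should agree to within the finite-difference error
```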

Related functions

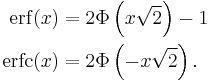

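The error function is essentially identical to the standard normal cumulative distribution function, denoted Φ (also named norm(x) in some software), because they differ only by scaling and translation. Indeed,

$$\Phi(x) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^x e^{-t^2/2}\,dt = \frac{1}{2}\left[1 + \operatorname{erf}\left(\frac{x}{\sqrt{2}}\right)\right] = \frac{1}{2}\operatorname{erfc}\left(-\frac{x}{\sqrt{2}}\right),$$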

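or rearranged for erf and erfc:

$$\operatorname{erf}(x) = 2\Phi\left(x\sqrt{2}\right) - 1, \qquad \operatorname{erfc}(x) = 2\Phi\left(-x\sqrt{2}\right) = 2\left(1 - \Phi\left(x\sqrt{2}\right)\right).$$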

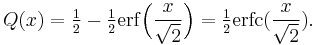

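Consequently, the error function is also closely related to the Q-function, which is the tail probability of the standard normal distribution. The Q-function can be expressed in terms of the error function as

$$Q(x) = \frac{1}{2} - \frac{1}{2}\operatorname{erf}\left(\frac{x}{\sqrt{2}}\right) = \frac{1}{2}\operatorname{erfc}\left(\frac{x}{\sqrt{2}}\right).$$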

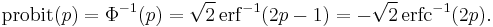

The inverse of Φ is known as the normal quantile function, or probit function, and may be expressed in terms of the inverse error function as

$$\operatorname{probit}(p) = \Phi^{-1}(p) = \sqrt{2}\,\operatorname{erf}^{-1}(2p - 1) = -\sqrt{2}\,\operatorname{erfc}^{-1}(2p).$$

The standard normal cdf is used more often in probability and statistics, and the error function is used more often in other branches of mathematics.
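These identities can be checked against Python's `statistics.NormalDist` (Python 3.8+). The sketch below builds Φ and Q from `math.erf` and `math.erfc`. The standard library has no inverse error function, so the sketch uses `NormalDist().inv_cdf` as the probit and checks that erf(probit(p)/√2) = 2p − 1, which is the probit relation rearranged.

```python
import math
from statistics import NormalDist

SQRT2 = math.sqrt(2.0)


def phi(x):
    # standard normal CDF: (1 + erf(x / sqrt 2)) / 2
    return 0.5 * (1.0 + math.erf(x / SQRT2))


def q_function(x):
    # upper tail probability: erfc(x / sqrt 2) / 2
    return 0.5 * math.erfc(x / SQRT2)


std = NormalDist()               # mean 0, standard deviation 1
for x in (-2.0, 0.0, 1.5):
    print(x, phi(x), std.cdf(x), q_function(x))

# NormalDist.inv_cdf plays the role of the probit function here
p = 0.975
z = std.inv_cdf(p)
print(z, math.erf(z / SQRT2), 2 * p - 1)
```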

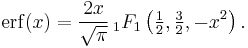

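The error function is a special case of the Mittag-Leffler function, and can also be expressed as a confluent hypergeometric function (Kummer's function):

$$\operatorname{erf}(x) = \frac{2x}{\sqrt{\pi}}\, M\left(\tfrac{1}{2}, \tfrac{3}{2}, -x^2\right).$$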

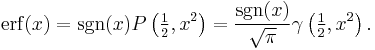

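It has a simple expression in terms of the Fresnel integral. In terms of the regularized gamma function P and the lower incomplete gamma function γ,

$$\operatorname{erf}(x) = \operatorname{sgn}(x)\, P\left(\tfrac{1}{2}, x^2\right) = \frac{\operatorname{sgn}(x)}{\sqrt{\pi}}\, \gamma\left(\tfrac{1}{2}, x^2\right),$$

where sgn(x) is the sign function.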
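The hypergeometric representation can be checked by summing Kummer's series M(a, b, z) = Σ (a)ₙ/(b)ₙ · zⁿ/n! term by term. The Python sketch below evaluates erf through M(½, 3/2, −x²). Note that `hyp1f1` here is our own helper, not a library function, and plain summation is adequate only for moderate |z|.

```python
import math


def hyp1f1(a, b, z, rel_tol=1e-17, max_terms=500):
    """Kummer's function M(a, b, z) = sum_n (a)_n / (b)_n * z^n / n!, by direct summation.

    Illustrative only: plain summation is fine for moderate |z| but suffers
    from cancellation for large negative z.
    """
    term = total = 1.0
    for n in range(max_terms):
        # ratio of consecutive terms: (a + n) z / ((b + n)(n + 1))
        term *= (a + n) * z / ((b + n) * (n + 1))
        total += term
        if abs(term) <= rel_tol * abs(total):
            break
    return total


def erf_via_kummer(x):
    # erf(x) = 2x/sqrt(pi) * M(1/2, 3/2, -x^2)
    return 2 * x / math.sqrt(math.pi) * hyp1f1(0.5, 1.5, -x * x)


print(erf_via_kummer(0.8), math.erf(0.8))
```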

Generalized error functions

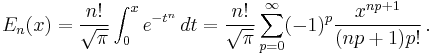

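Some authors discuss the more general functions:

$$E_n(x) = \frac{n!}{\sqrt{\pi}} \int_0^x e^{-t^n}\,dt = \frac{n!}{\sqrt{\pi}} \sum_{p=0}^\infty (-1)^p \frac{x^{np+1}}{(np+1)\,p!}.$$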

Notable cases are:

- E0(x) is a straight line through the origin: $E_0(x) = \frac{x}{e\sqrt{\pi}}$.

- E2(x) is the error function, erf(x).

After division by n!, all the En for odd n look similar (but not identical) to each other. Similarly, the En for even n look similar (but not identical) to each other after a simple division by n!. All generalised error functions for n > 0 look similar on the positive x side of the graph.

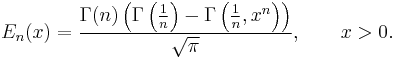

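These generalised functions can equivalently be expressed for x > 0 using the Gamma function and incomplete Gamma function:

$$E_n(x) = \frac{\Gamma(n)\left(\Gamma\left(\frac{1}{n}\right) - \Gamma\left(\frac{1}{n}, x^n\right)\right)}{\sqrt{\pi}}, \qquad x > 0.$$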

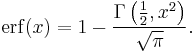

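Therefore, we can define the error function in terms of the incomplete Gamma function:

$$\operatorname{erf}(x) = 1 - \frac{\Gamma\left(\frac{1}{2}, x^2\right)}{\sqrt{\pi}}, \qquad x > 0.$$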
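Below is a minimal Python sketch of E_n, assuming integer n ≥ 0. `generalized_erf` sums the power series, and `generalized_erf_quad` evaluates the integral definition with composite Simpson's rule as an independent check. Both names are our own. For n = 2 the result should reproduce `math.erf`, and for n = 0 the straight line x/(e√π).

```python
import math


def generalized_erf(n, x, terms=200):
    """E_n(x) from the series n!/sqrt(pi) * sum_p (-1)^p x^(np+1) / ((np+1) p!)."""
    total = 0.0
    term = float(x)              # (-1)^p x^(np+1) / p! at p = 0
    xn = float(x) ** n
    for p in range(terms):
        total += term / (n * p + 1)
        term *= -xn / (p + 1)
    return math.factorial(n) / math.sqrt(math.pi) * total


def generalized_erf_quad(n, x, steps=2000):
    """E_n(x) from its integral definition, via composite Simpson's rule (steps even)."""
    def f(t):
        return math.exp(-(t ** n))

    h = x / steps
    s = f(0.0) + f(x) + sum((4 if i % 2 else 2) * f(i * h) for i in range(1, steps))
    return math.factorial(n) / math.sqrt(math.pi) * s * h / 3


print(generalized_erf(2, 1.0), math.erf(1.0))
print(generalized_erf(3, 1.0), generalized_erf_quad(3, 1.0))
```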

Iterated integrals of the complementary error function

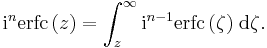

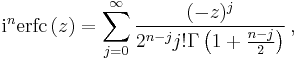

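The iterated integrals of the complementary error function are defined by

$$i^n\operatorname{erfc}(z) = \int_z^\infty i^{n-1}\operatorname{erfc}(\zeta)\,d\zeta, \qquad i^0\operatorname{erfc}(z) = \operatorname{erfc}(z),$$

so that, for example, $i^1\operatorname{erfc}(z) = \operatorname{ierfc}(z) = \frac{1}{\sqrt{\pi}}e^{-z^2} - z\operatorname{erfc}(z)$.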

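They have the power series

$$i^n\operatorname{erfc}(z) = \sum_{j=0}^\infty \frac{(-z)^j}{2^{n-j}\, j!\, \Gamma\left(1 + \frac{n-j}{2}\right)},$$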

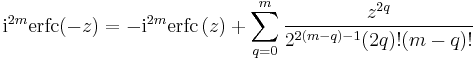

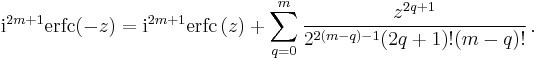

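from which follow the symmetry properties

$$i^{2m}\operatorname{erfc}(-z) = -i^{2m}\operatorname{erfc}(z) + \sum_{q=0}^m \frac{z^{2q}}{2^{2(m-q)-1}\,(2q)!\,(m-q)!}$$

and

$$i^{2m+1}\operatorname{erfc}(-z) = i^{2m+1}\operatorname{erfc}(z) + \sum_{q=0}^m \frac{z^{2q+1}}{2^{2(m-q)-1}\,(2q+1)!\,(m-q)!}.$$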
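The power series and the symmetry properties can be checked numerically. This Python sketch (names are our own) computes iⁿerfc in two independent ways:

- `ierfc_series` sums the series above, using a reciprocal-gamma helper that returns 0 at the poles of Γ.
- `ierfc_recurrence` starts from erfc and i¹erfc(z) = e^{−z²}/√π − z erfc(z) and applies the recurrence 2k iᵏerfc(z) = iᵏ⁻²erfc(z) − 2z iᵏ⁻¹erfc(z). That recurrence is a standard identity that is not stated in the text above, so treat it as an assumption.

Forward recursion can lose relative accuracy for large positive z, so the sketch is intended for small |z|.

```python
import math


def _rgamma(s):
    """1/Gamma(s), defined as 0 at the poles s = 0, -1, -2, ..."""
    if s <= 0 and s == int(s):
        return 0.0
    return 1.0 / math.gamma(s)


def ierfc_series(n, z, terms=80):
    """i^n erfc(z) from sum_j (-z)^j / (2^(n-j) j! Gamma(1 + (n-j)/2))."""
    return sum((-z) ** j / (2.0 ** (n - j) * math.factorial(j)) * _rgamma(1 + (n - j) / 2)
               for j in range(terms))


def ierfc_recurrence(n, z):
    """i^n erfc(z) via 2k i^k erfc = i^(k-2) erfc - 2z i^(k-1) erfc (assumed identity)."""
    prev = math.erfc(z)                                        # i^0 erfc(z)
    cur = math.exp(-z * z) / math.sqrt(math.pi) - z * prev     # i^1 erfc(z)
    if n == 0:
        return prev
    for k in range(2, n + 1):
        prev, cur = cur, (prev - 2 * z * cur) / (2 * k)
    return cur


print(ierfc_series(2, 0.5), ierfc_recurrence(2, 0.5))
```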

Implementations

- C: C99 provides the functions double erf(double x) and double erfc(double x) in the header math.h. The pairs of functions {erff(),erfcf()} and {erfl(),erfcl()} take and return values of type float and long double respectively.

- C++: C++11 provides erf() and erfc() in the header cmath. Both functions are overloaded to accept arguments of type float, double, and long double.

- Fortran: The Fortran 2008 standard provides the ERF, ERFC and ERFC_SCALED functions to calculate the error function and its complement.

- Python: Included since version 2.7 as math.erf() and math.erfc(). For previous versions, and for complex arguments, an implementation is available in SciPy as scipy.special.erf()[3] and in the arbitrary-precision arithmetic library mpmath as mpmath.erf().

- Mathematica: erf and erfc are implemented as Erf and Erfc.

- Haskell: An erf package[4] provides a typeclass for the error function and implementations for the native floating-point types.

- R: "The so-called 'error function'"[5] is not provided directly, but is detailed as an example of the normal cumulative distribution function (?pnorm), which is based on W. J. Cody's rational Chebyshev approximation algorithm.[6]

- MATLAB: Provides both erf and erfc, also via W. J. Cody's algorithm.

- Ruby: Provides Math.erf() and Math.erfc().

Table of values

| x | erf(x) | erfc(x) | x | erf(x) | erfc(x) |
|---|---|---|---|---|---|
| 0.00 | 0.0000000 | 1.0000000 | 1.30 | 0.9340079 | 0.0659921 |
| 0.05 | 0.0563720 | 0.9436280 | 1.40 | 0.9522851 | 0.0477149 |
| 0.10 | 0.1124629 | 0.8875371 | 1.50 | 0.9661051 | 0.0338949 |
| 0.15 | 0.1679960 | 0.8320040 | 1.60 | 0.9763484 | 0.0236516 |
| 0.20 | 0.2227026 | 0.7772974 | 1.70 | 0.9837905 | 0.0162095 |
| 0.25 | 0.2763264 | 0.7236736 | 1.80 | 0.9890905 | 0.0109095 |
| 0.30 | 0.3286268 | 0.6713732 | 1.90 | 0.9927904 | 0.0072096 |
| 0.35 | 0.3793821 | 0.6206179 | 2.00 | 0.9953223 | 0.0046777 |
| 0.40 | 0.4283924 | 0.5716076 | 2.10 | 0.9970205 | 0.0029795 |
| 0.45 | 0.4754817 | 0.5245183 | 2.20 | 0.9981372 | 0.0018628 |
| 0.50 | 0.5204999 | 0.4795001 | 2.30 | 0.9988568 | 0.0011432 |
| 0.55 | 0.5633234 | 0.4366766 | 2.40 | 0.9993115 | 0.0006885 |
| 0.60 | 0.6038561 | 0.3961439 | 2.50 | 0.9995930 | 0.0004070 |
| 0.65 | 0.6420293 | 0.3579707 | 2.60 | 0.9997640 | 0.0002360 |
| 0.70 | 0.6778012 | 0.3221988 | 2.70 | 0.9998657 | 0.0001343 |
| 0.75 | 0.7111556 | 0.2888444 | 2.80 | 0.9999250 | 0.0000750 |
| 0.80 | 0.7421010 | 0.2578990 | 2.90 | 0.9999589 | 0.0000411 |
| 0.85 | 0.7706681 | 0.2293319 | 3.00 | 0.9999779 | 0.0000221 |
| 0.90 | 0.7969082 | 0.2030918 | 3.10 | 0.9999884 | 0.0000116 |
| 0.95 | 0.8208908 | 0.1791092 | 3.20 | 0.9999940 | 0.0000060 |
| 1.00 | 0.8427008 | 0.1572992 | 3.30 | 0.9999969 | 0.0000031 |
| 1.10 | 0.8802051 | 0.1197949 | 3.40 | 0.9999985 | 0.0000015 |
| 1.20 | 0.9103140 | 0.0896860 | 3.50 | 0.9999993 | 0.0000007 |

| x | erfc(x)/2 |
|---|---|
| 1 | 0.0786496 |
| 2 | 0.00233887 |
| 3 | 1.10452e-05 |
| 4 | 7.70863e-09 |
| 5 | 7.6873e-13 |
| 6 | 1.07599e-17 |
| 7 | 2.09191e-23 |
| 8 | 5.61215e-30 |
| 9 | 2.06852e-37 |
| 10 | 1.04424e-45 |
| 11 | 7.20433e-55 |
| 12 | 6.78131e-65 |
| 13 | 8.69779e-76 |
| 14 | 1.51861e-87 |
| 15 | 3.6065e-100 |
| 16 | 1.16424e-113 |
| 17 | 5.10614e-128 |
| 18 | 3.04118e-143 |
| 19 | 2.45886e-159 |
| 20 | 2.69793e-176 |
| 21 | 4.01623e-194 |
| 22 | 8.10953e-213 |
| 23 | 2.22063e-232 |
| 24 | 8.24491e-253 |
| 25 | 4.15009e-274 |
| 26 | 2.8316e-296 |
| 27 | 2.61855e-319 |
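The table entries can be regenerated with Python's standard `math` module. This sketch prints a few rows of each table in the same format. It relies only on `math.erf` and `math.erfc` and does not describe how the tables were originally produced.

```python
import math

# A few rows of the first table: x, erf(x), erfc(x) to 7 decimals
for x in (0.5, 1.0, 2.0, 3.5):
    print(f"{x:4.2f}  {math.erf(x):.7f}  {math.erfc(x):.7f}")

# A few rows of the second table: x, erfc(x)/2 to 6 significant digits
for x in (1, 5, 10, 20):
    print(f"{x:2d}  {math.erfc(x) / 2:.6g}")
```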

See also

Related functions

- Gaussian integral, over the whole real line

- Gaussian function, derivative

- Dawson function, renormalized imaginary error function

In probability

- Normal distribution

- Normal cumulative distribution function, a scaled and shifted form of error function

- Probit, the inverse or quantile function of the normal CDF

- Q-function, the tail probability of the normal distribution

References

- ^ Cuyt, A.A.M.; Petersen, V.; Verdonk, B.; Waadeland, H.; Jones, W.B. (2008). Handbook of Continued Fractions for Special Functions. Springer-Verlag. ISBN 978-1-4020-6948-2.

- ^ Winitzki, Sergei (6 February 2008). "A handy approximation for the error function and its inverse" (PDF). http://sites.google.com/site/winitzki/sergei-winitzkis-files/erf-approx.pdf. Retrieved 2011-10-03.

- ^ http://docs.scipy.org/doc/scipy/reference/generated/scipy.special.erf.html

- ^ http://hackage.haskell.org/package/erf

- ^ R Development Core Team (2011-02-25), R: The Normal Distribution, http://stat.ethz.ch/R-manual/R-patched/library/stats/html/Normal.html

- ^ Cody, W. J. (1969). "Rational Chebyshev Approximations for the Error Function". Math. Comp. 23 (107): 631–637. http://www.ams.org/journals/mcom/1969-23-107/S0025-5718-1969-0247736-4/S0025-5718-1969-0247736-4.pdf.

- Abramowitz, Milton; Stegun, Irene A., eds. (1965), "Chapter 7", Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables, New York: Dover, p. 297, ISBN 978-0486612720, MR0167642, http://www.math.sfu.ca/~cbm/aands/page_297.htm.

- Press, WH; Teukolsky, SA; Vetterling, WT; Flannery, BP (2007), "Section 6.2. Incomplete Gamma Function and Error Function", Numerical Recipes: The Art of Scientific Computing (3rd ed.), New York: Cambridge University Press, ISBN 978-0-521-88068-8, http://apps.nrbook.com/empanel/index.html#pg=259

- Temme, N. M. (2010), "Error Functions, Dawson’s and Fresnel Integrals", in Olver, Frank W. J.; Lozier, Daniel M.; Boisvert, Ronald F. et al., NIST Handbook of Mathematical Functions, Cambridge University Press, ISBN 978-0521192255, MR2723248, http://dlmf.nist.gov/7

External links

